Securing Kubernetes using the Pod Security Policy Admission Controller

Kubernetes has unquestionably become the leading open-source platform for orchestrating and managing containerized applications and services. One of the many reasons for its success has been its focus on security. However, while Kubernetes comes with a plethora of built-in security tools and features, many of them are not active by default and must be enabled and configured by administrators.

In that regard, this tutorial’s objective is to explore the capabilities of one of the most powerful controllers available to K8s administrators, the Pod Security Policy admission controller.

Admission Controllers 101

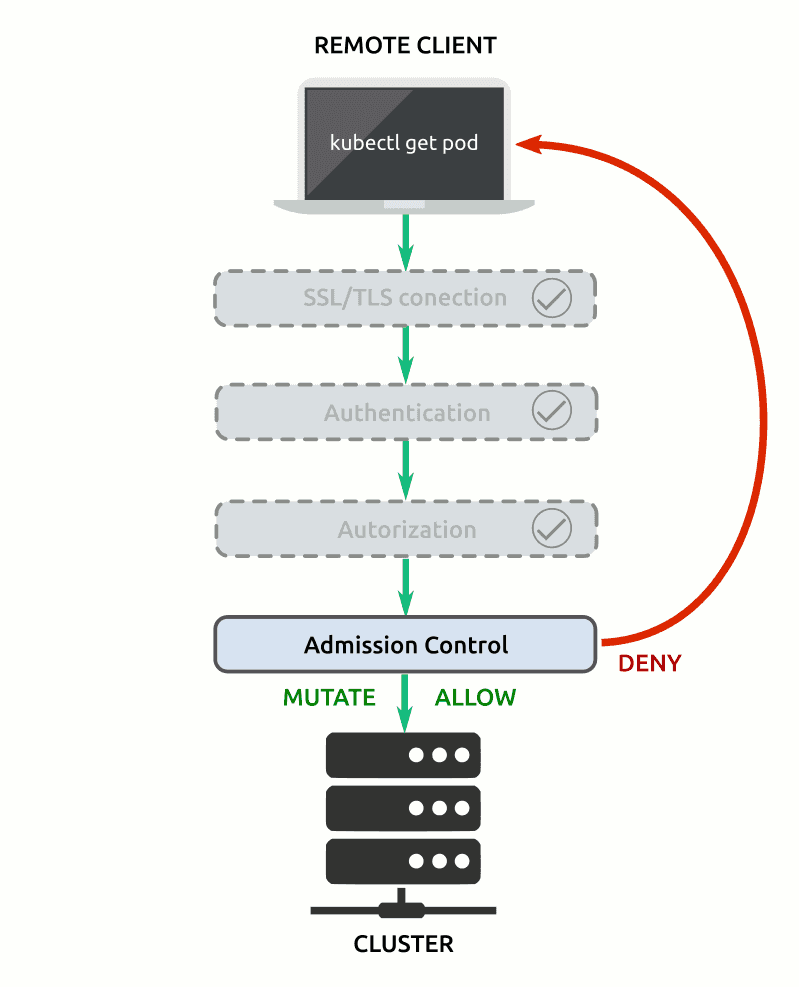

By design, each call made to the Kubernetes API goes through a sequential process of authentication and authorization. This means that both checks always occur, regardless of whether the caller is a human user or a service account.

Admission controllers, on the other hand, are optional (but strongly recommended) plug-ins that are compiled into the kube-apiserver binary to broaden the available security options. Admission controllers intercept requests after they pass the authentication and authorization checks, and they execute their code just before the object is persisted.

From a security perspective, it’s important to understand some aspects related to admission controllers:

- While the outcome of an authentication or authorization check is a boolean (allow or deny the request), admission controllers are more diverse. Some admission controllers validate requests, some mutate requests, and some can do both. Admission control runs in two phases: mutating controllers run first, then validating controllers check the (possibly modified) request. If the request fails either the mutating phase or the validating phase, the entire request is denied.

- Admission controllers can be enabled or disabled by editing the --enable-admission-plugins (or --disable-admission-plugins) flag. You can do this either in the kube-apiserver manifest (common in self-hosted deployments) or in the corresponding systemd unit file (when the API server is deployed as a systemd service).
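The two-phase behavior described above can be illustrated with a small Python model. This is a hypothetical, simplified sketch, not how kube-apiserver is implemented. Each plugin is modeled as a plain function, and the example plugins (add_default_team_label, require_team_label) are invented for illustration. Mutating plugins run first, validating plugins then see the mutated pod, and any rejection denies the whole request.

```python
from typing import Callable, Optional

# Hypothetical, simplified model of the admission chain; not kube-apiserver code.
Pod = dict
Mutator = Callable[[Pod], Pod]              # returns a (possibly modified) pod
Validator = Callable[[Pod], Optional[str]]  # returns an error message, or None to allow


class AdmissionDenied(Exception):
    """Raised when any plugin rejects the request."""


def admit(pod: Pod, mutators: list[Mutator], validators: list[Validator]) -> Pod:
    """Run all mutating plugins, then all validating plugins.

    A mutator may also reject by raising AdmissionDenied. Any rejection
    denies the entire request; only a fully admitted pod is returned.
    """
    for mutate in mutators:        # phase 1: mutation
        pod = mutate(pod)
    for validate in validators:    # phase 2: validation sees the mutated pod
        error = validate(pod)
        if error is not None:
            raise AdmissionDenied(error)
    return pod                     # at this point the object would be persisted


# Hypothetical example plugins.
def add_default_team_label(pod: Pod) -> Pod:
    labels = {"team": "default", **pod.get("labels", {})}  # keep an existing value
    return {**pod, "labels": labels}


def require_team_label(pod: Pod) -> Optional[str]:
    return None if "team" in pod.get("labels", {}) else "missing 'team' label"


print(admit({"name": "web"}, [add_default_team_label], [require_team_label]))
# {'name': 'web', 'labels': {'team': 'default'}}
```

Without the mutating plugin, calling admit({"name": "web"}, [], [require_team_label]) raises AdmissionDenied. In that case validation runs against the unmodified pod.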

Although admission controllers are optional, some might be necessary for particular use cases. As the official Kubernetes documentation states: “Many advanced features in Kubernetes require an admission controller to be enabled in order to properly support the feature.” That explains why some cluster bootstrapping methods, such as kubeadm, enable a few admission controllers by default.

To see which admission controllers are enabled by default in your kube-apiserver version, you can run the following command on a control plane node:

kube-apiserver -h | grep enable-admission-plugins
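The help output describes the plugins that are enabled by default for that binary. Those defaults stay active unless they are listed in --disable-admission-plugins. To see what a particular cluster adds on top of them, you can inspect the flag in the API server manifest. The Python sketch below assumes the flag uses the --enable-admission-plugins=value form, as in a kubeadm static pod manifest (typically /etc/kubernetes/manifests/kube-apiserver.yaml). The helper name configured_admission_plugins is hypothetical.

```python
import re

# Hypothetical helper: extract the value of --enable-admission-plugins from the
# text of a kube-apiserver manifest or systemd unit file (flag=value form only).
FLAG_RE = re.compile(r"--enable-admission-plugins=([^\s\"']+)")


def configured_admission_plugins(manifest_text: str) -> list[str]:
    """Return the plugins listed in the flag, or an empty list if the flag is absent."""
    match = FLAG_RE.search(manifest_text)
    if match is None:
        return []
    return [name for name in match.group(1).split(",") if name]


sample = """
spec:
  containers:
  - command:
    - kube-apiserver
    - --enable-admission-plugins=NodeRestriction
"""
print(configured_admission_plugins(sample))  # ['NodeRestriction']

# On a control plane node you might read the real manifest (path is an assumption):
# with open("/etc/kubernetes/manifests/kube-apiserver.yaml") as f:
#     print(configured_admission_plugins(f.read()))
```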

The next section will explain what the PodSecurityPolicy admission controller is, how it works, and how it can be used to enhance cluster security.

Pod Security Policy Admission Controller

Once enabled, the PodSecurityPolicy admission controller checks every request to create or update a pod against the policies previously created by the cluster administrator. It validates the pod and, where a policy defines default values, also mutates it.

In other words, Pod Security Policies (PSPs) are applied at the cluster level. Once the controller is active, only pods that comply with an established policy will be created or updated.

Keep this in mind before enabling this admission controller. Users and service accounts will not be able to create or update pods until a policy exists that they are authorized to use and that their pods comply with.

The validation process used by the PodSecurityPolicy admission controller consists of two steps:

- Pod Security Policies Check. Each pod creation or update request is compared with the Pod Security Policies (PSPs) that exist in the cluster. A policy that admits the pod as-is, without changing it, is preferred. If the pod must be defaulted or mutated, the policies are evaluated in alphabetical order by name, and the first one that admits the pod is selected. If no policy admits the pod, the whole request is rejected and an error message is returned to the user.

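- Role-Based Access Control (RBAC) Check. Only policies that the requesting user, or the pod’s service account, is authorized to use are taken into account. This authorization is granted through the use verb on the podsecuritypolicies resource in the policy API group. A policy that neither of them can use is ignored, even if the pod complies with it.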
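The two checks can be summarized with a simplified Python model. This is a sketch under stated assumptions, not the admission controller’s actual code. A policy is reduced to a name plus two hypothetical predicates, admits and mutates, and RBAC authorization is a hypothetical set of (subject, policy name) pairs. The model follows the ordering described in the Kubernetes documentation. Only policies usable by the requesting user or the pod’s service account are considered. Among those, a policy that admits the pod unchanged wins; otherwise the first admitting policy by name is selected.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Pod = dict


@dataclass(frozen=True)
class Policy:
    name: str
    admits: Callable[[Pod], bool]   # would the pod be valid under this policy?
    mutates: Callable[[Pod], bool]  # would the policy have to default/change the pod?


def select_policy(pod: Pod, user: str, service_account: str,
                  policies: list[Policy],
                  can_use: set[tuple[str, str]]) -> Optional[str]:
    """Return the name of the policy that admits the pod, or None if the request is rejected."""
    # RBAC step: keep only policies the user OR the pod's service account may "use".
    usable = [p for p in policies
              if (user, p.name) in can_use or (service_account, p.name) in can_use]
    # Validation step: candidates that admit the pod, ordered by name.
    admitting = sorted((p for p in usable if p.admits(pod)), key=lambda p: p.name)
    # A policy that accepts the pod unchanged is preferred.
    for p in admitting:
        if not p.mutates(pod):
            return p.name
    # Otherwise the first admitting policy in alphabetical order is chosen.
    return admitting[0].name if admitting else None


# Hypothetical stand-ins for the two policies used later in this tutorial.
allow_all = Policy("allow-all", admits=lambda pod: True, mutates=lambda pod: False)
deny_all = Policy("deny-all",
                  admits=lambda pod: not pod.get("privileged", False),
                  mutates=lambda pod: "runAsNonRoot" not in pod)
policies = [deny_all, allow_all]
nginx = {"image": "nginx"}

print(select_policy(nginx, "k8user", "default", policies, set()))                   # None
print(select_policy(nginx, "admin", "default", policies, {("admin", "deny-all")}))  # deny-all
print(select_policy(nginx, "admin", "default", policies,
                    {("admin", "deny-all"), ("admin", "allow-all")}))                # allow-all
```

In the first call, no policy is authorized, so the request is rejected. In the last call, allow-all is preferred because it admits the pod without mutating it.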

The combination of PSPs and RBAC authorization allows cluster administrators to create fine-grained security policies for each user and/or service account. Moreover, Pod Security Policies allow cluster admins to set default values for security-related fields of the pod specification. With Pod Security Policies, K8s admins can control key container security aspects such as:

- The ability of pods/containers to access the host’s file system

- The volume types that pods/containers can use

- The ability of containers to run with root privileges as well as its ability to use privilege escalation

- Host’s networking resources and configurations that pods/containers can use

- The ability to create an allowlist of FlexVolume drivers

- The ability to determine which host namespaces can be used

- The requirement to use a read-only root file system

- The possibility to specify which user and group IDs are allowed in the container

- Control over Linux capabilities, the SELinux context, and the AppArmor, seccomp, and sysctl profiles

- The allocation of an FSGroup that owns the pod’s volumes

- The ability to specify allowed Proc Mount types for the container

Pod Security Policies In Action

The best way to illustrate how the PodSecurityPolicy admission controller works is to see it in action. Let’s say you need a Kubernetes cluster using two different Pod Security Policies: one fully restrictive policy for the default namespace and a totally relaxed policy for the dmz namespace.

For instance, the default namespace could be restricted in such a way that no user or service account would be allowed to create or update pods unless explicitly authorized to do so. Conversely, in the dmz namespace, any user or service account (with proper RBAC permissions) would be able to create or update pods freely.

In order to test such a Kubernetes environment you would need:

- A new Kubernetes installation running v1.24 or earlier (PodSecurityPolicy was deprecated in v1.21 and removed in v1.25). You can use MicroK8s, Minikube, or any other cluster testing solution you consider adequate

- A namespace called dmz

- A super administrator user. The default Kubernetes superuser is more than enough

- A non-administrator user with the edit role assigned in both namespaces, default and dmz. For the purpose of this example, we will use a user called k8user

- Proper kubeconfig setup so both users can issue kubectl commands as needed. For the purpose of this example, the kubeconfig of k8user will be saved at /home/k8user/.kube/config-k8user

Once ready, and before enabling the admission controller, start by using k8user to create a new nginx pod in the default namespace with the following command:

kubectl --kubeconfig=/home/k8user/.kube/config-k8user --namespace=default run nginx-no-psp --image=nginx --port=80 --restart=Never

List the pods in the default namespace to check it was created:

kubectl --kubeconfig=/home/k8user/.kube/config-k8user --namespace=default get pods

You should see an output similar to this:

NAME           READY   STATUS    RESTARTS   AGE
nginx-no-psp   1/1     Running   0          9m58s

Next, create a new directory on your local machine to save all the necessary files:

mkdir ~/policies

Using your favorite text editor, create a new pod security policy file:

nano ~/policies/deny-all.yaml

Now, paste the following content into the file:

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: deny-all
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: 'docker/default'
    apparmor.security.beta.kubernetes.io/allowedProfileNames: 'runtime/default'
    seccomp.security.alpha.kubernetes.io/defaultProfileName: 'docker/default'
    apparmor.security.beta.kubernetes.io/defaultProfileName: 'runtime/default'
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  # This is redundant with non-root + disallow privilege escalation,
  # but we can provide it for defense in depth.
  requiredDropCapabilities:
    - ALL
  # Allow core volume types.
  volumes:
    - 'configMap'
    - 'emptyDir'
    - 'projected'
    - 'secret'
    - 'downwardAPI'
    # Assume that persistentVolumes set up by the cluster admin are safe to use.
    - 'persistentVolumeClaim'
  hostNetwork: false
  hostIPC: false
  hostPID: false
  runAsUser:
    # Require the container to run without root privileges.
    rule: 'MustRunAsNonRoot'
  seLinux:
    # This policy assumes the nodes are using AppArmor rather than SELinux.
    rule: 'RunAsAny'
  supplementalGroups:
    rule: 'MustRunAs'
    ranges:
      # Forbid adding the root group.
      - min: 1
        max: 65535
  fsGroup:
    rule: 'MustRunAs'
    ranges:
      # Forbid adding the root group.
      - min: 1
        max: 65535
  readOnlyRootFilesystem: false

Save and close the file.

Let’s take a moment to analyze the pod security policy.

- The policy explicitly denies privileged containers, running as the root user, and privilege escalation

- The policy drops all Linux capabilities and specifies which volume types can be used

- The policy explicitly denies access to the host’s network, IPC, and PID namespaces

- The policy also forbids adding the root group (GID 0) through supplementalGroups or fsGroup

This policy is good enough to prevent pods from running with root permissions and from using inappropriate volume types. To enforce the policy, you first need to create it in the cluster.
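To make these rules concrete, here is a hypothetical Python checker that mirrors a subset of the deny-all fields: privileged, allowPrivilegeEscalation, the host namespaces, the allowed volume types, and the non-root rule. It works on a simplified, flat pod description invented for this example (for instance, a volumeTypes list), not on a real Pod object. It also ignores the defaults the admission controller would apply.

```python
# Subset of the deny-all policy expressed as plain Python data (illustrative only).
ALLOWED_VOLUMES = {"configMap", "emptyDir", "projected", "secret",
                   "downwardAPI", "persistentVolumeClaim"}
HOST_NAMESPACE_FIELDS = ("hostNetwork", "hostIPC", "hostPID")


def deny_all_violations(pod: dict) -> list[str]:
    """Return reasons why a simplified, hypothetical pod description breaks the deny-all rules."""
    violations = []
    if pod.get("privileged", False):
        violations.append("privileged containers are not allowed")
    if pod.get("allowPrivilegeEscalation", False):
        violations.append("privilege escalation is not allowed")
    for field in HOST_NAMESPACE_FIELDS:
        if pod.get(field, False):
            violations.append(f"{field} is not allowed")
    for volume_type in pod.get("volumeTypes", []):
        if volume_type not in ALLOWED_VOLUMES:
            violations.append(f"volume type {volume_type!r} is not allowed")
    if pod.get("runAsUser") == 0:
        violations.append("running as UID 0 (root) is not allowed")
    return violations


print(deny_all_violations({"hostNetwork": True, "volumeTypes": ["hostPath", "secret"]}))
# ['hostNetwork is not allowed', "volume type 'hostPath' is not allowed"]
```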

Switch to the administrator user, and create the deny-all policy using the following command:

kubectl create -f ~/policies/deny-all.yaml

For demonstration purposes, no RBAC authorization for the policy will be created yet.

Activate the PodSecurityPolicy admission controller by editing the kube-apiserver manifest (on kubeadm clusters, /etc/kubernetes/manifests/kube-apiserver.yaml). Look for the --enable-admission-plugins flag and add PodSecurityPolicy to the list of active admission controllers. The corresponding line should look similar to:

--enable-admission-plugins=<comma-separated list of active admission controllers>,PodSecurityPolicy
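When editing this flag by hand, it is easy to add PodSecurityPolicy twice or to drop an existing entry. The following hypothetical Python helper shows the intended edit on a single manifest line. It appends PodSecurityPolicy to the comma-separated list only if it is missing, and it keeps the existing entries, their order, and the line’s indentation. It assumes the flag=value form shown above, and the function name add_admission_plugin is invented for illustration.

```python
import re

# Matches the flag name and its (possibly empty) comma-separated value.
FLAG_RE = re.compile(r"(--enable-admission-plugins=)([^\s\"']*)")


def add_admission_plugin(line: str, plugin: str = "PodSecurityPolicy") -> str:
    """Append `plugin` to the --enable-admission-plugins value in `line` if it is absent."""
    def _append(match: re.Match) -> str:
        plugins = [p for p in match.group(2).split(",") if p]
        if plugin not in plugins:
            plugins.append(plugin)
        return match.group(1) + ",".join(plugins)

    # Lines without the flag are returned unchanged.
    return FLAG_RE.sub(_append, line, count=1)


line = "    - --enable-admission-plugins=NodeRestriction"
print(add_admission_plugin(line).strip())
# - --enable-admission-plugins=NodeRestriction,PodSecurityPolicy
```

Running the helper a second time on its own output leaves the line unchanged, so the edit is idempotent.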

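Save and close the kube-apiserver manifest file, then restart the API server to apply the changes. On kubeadm clusters, the kubelet recreates the kube-apiserver static pod automatically when its manifest changes. If the API server runs as a systemd service, restart that service instead.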

Once you are done, try to create another nginx pod using the k8user:

kubectl --kubeconfig=/home/k8user/.kube/config-k8user --namespace=default run nginx-01 --image=nginx --port=80 --restart=Never

You will get an error message similar to this one:

Error from server (Forbidden): pods "nginx-01" is forbidden: unable to validate against any pod security policy: []

Now, try creating the same pod using the administrator user:

kubectl --namespace=default run nginx-01 --image=nginx --port=80 --restart=Never

You will get the message pod/nginx-01 created. However, before continuing, list the pods using the command:

kubectl get pods

You will see an output similar to this:

NAME           READY   STATUS                       RESTARTS   AGE
nginx-01       0/1     CreateContainerConfigError   0          10m
nginx-no-psp   1/1     Running                      0          52m

Although no error was reported when the pod was created, its container never started. Inspect the pod to check what caused the error:

kubectl describe pod nginx-01

At the end of the pod description, in the Events section, you will notice a warning similar to this one:

Warning Failed 11m (x6 over 13m) kubelet, u18s1 Error: container has runAsNonRoot and image will run as root

How is that possible? And why did each user get a different error?

The explanation has to do with how the pod security policies work:

- When k8user issued the command, the PSP admission controller rejected the request because the user was not authorized to use any policy.

- The superuser, in contrast, has enough rights to perform any action within the cluster, including using the deny-all policy. The request was therefore admitted, and the PSP admission controller mutated the pod according to the policy (for example, by setting runAsNonRoot). Once the pod was mutated, the container was unable to run because the nginx image is built to run with root privileges.
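The superuser’s failure happens after admission, when the container is started on the node. A simplified Python model of that check makes the sequence explicit. It is a sketch, not the kubelet’s source. An explicit runAsUser in the pod spec takes precedence; otherwise the image’s user is used, and a UID of 0 is refused when runAsNonRoot is true. The model assumes that the nginx image’s default user is UID 0 (root), which is what the error message above reports. Apart from that quoted message, the returned strings are paraphrased.

```python
from typing import Optional


def verify_run_as_non_root(run_as_non_root: bool,
                           pod_run_as_user: Optional[int],
                           image_uid: Optional[int]) -> Optional[str]:
    """Simplified model of the node-side runAsNonRoot check; returns an error or None."""
    if not run_as_non_root:
        return None  # nothing to enforce
    if pod_run_as_user is not None:
        # An explicit runAsUser in the pod spec takes precedence over the image's user.
        return "runAsUser 0 breaks the non-root policy" if pod_run_as_user == 0 else None
    if image_uid is None:
        # Assumption: the image user is a name, not a numeric UID, so it cannot be verified.
        return "image user is not numeric, cannot verify it is non-root"
    if image_uid == 0:
        return "container has runAsNonRoot and image will run as root"
    return None


# deny-all mutated the superuser's pod to require non-root; nginx defaults to UID 0.
print(verify_run_as_non_root(True, None, 0))
# container has runAsNonRoot and image will run as root
```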

To continue with the example, let’s create another PSP for the dmz namespace. Using your favorite text editor, create the corresponding policy file on your local machine:

nano ~/policies/allow-all.yaml

Paste the following content into the file:

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: allow-all
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: '*'
spec:
  privileged: true
  allowPrivilegeEscalation: true
  allowedCapabilities:
    - '*'
  volumes:
    - '*'
  hostNetwork: true
  hostPorts:
    - min: 0
      max: 65535
  hostIPC: true
  hostPID: true
  runAsUser:
    rule: 'RunAsAny'
  seLinux:
    rule: 'RunAsAny'
  supplementalGroups:
    rule: 'RunAsAny'
  fsGroup:
    rule: 'RunAsAny'

As you can see, this policy is quite the opposite of the first one, hence the name allow-all. In practice, this Pod Security Policy sets no limits on any aspect of the pod, which is the closest thing to not having a PSP at all.

Now, create the PSP resource in the cluster by issuing:

kubectl create -f ~/policies/allow-all.yaml

List the Pod Security Policies so you can compare them:

kubectl get psp

You will see an output similar to this one:

NAME        PRIV    CAPS   SELINUX    RUNASUSER          FSGROUP     SUPGROUP    READONLYROOTFS   VOLUMES
allow-all   true    *      RunAsAny   RunAsAny           RunAsAny    RunAsAny    false            *
deny-all    false          RunAsAny   MustRunAsNonRoot   MustRunAs   MustRunAs   false            configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

Next, create a cluster role and a role binding for the new policy. Using your favorite text editor, create the following file:

nano ~/policies/allow-all-rbac.yaml

Paste the following content into the file:

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: allow-all-clusterrole
rules:
  - apiGroups: ['policy']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames:
      - allow-all
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: allow-all-rolebinding
  namespace: dmz
roleRef:
  kind: ClusterRole
  name: allow-all-clusterrole
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: Group
    apiGroup: rbac.authorization.k8s.io
    name: system:authenticated

Save and close the file. Create the resource in the cluster using the command:

kubectl create -f ~/policies/allow-all-rbac.yaml
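Because allow-all is granted through a RoleBinding in the dmz namespace, permission to use it applies only to pods in dmz; a ClusterRoleBinding would grant it cluster-wide. The binding’s subject is the system:authenticated group, which every authenticated user belongs to. The following hypothetical Python model captures that scoping rule. The Binding layout and the may_use_policy name are invented for this example, and a namespace of None stands for a ClusterRoleBinding.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Binding:
    policy: str               # PSP that the bound role allows to "use"
    group: str                # subject group of the binding
    namespace: Optional[str]  # RoleBinding namespace, or None for a ClusterRoleBinding


def may_use_policy(policy: str, user_groups: set[str], pod_namespace: str,
                   bindings: list[Binding]) -> bool:
    """True if some binding lets one of the user's groups use `policy` in `pod_namespace`."""
    for b in bindings:
        in_scope = b.namespace is None or b.namespace == pod_namespace
        if b.policy == policy and b.group in user_groups and in_scope:
            return True
    return False


bindings = [Binding("allow-all", "system:authenticated", "dmz")]
k8user_groups = {"system:authenticated"}
print(may_use_policy("allow-all", k8user_groups, "dmz", bindings))      # True
print(may_use_policy("allow-all", k8user_groups, "default", bindings))  # False
```

This scoping is why k8user can use allow-all in dmz but still has no usable policy in the default namespace.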

Now that both the policy and the RBAC rules are in place, try creating an nginx pod in the dmz namespace using k8user:

kubectl --kubeconfig=/home/k8user/.kube/config-k8user --namespace=dmz run nginx-01 --image=nginx --port=80 --restart=Never

List the pods to check it was properly created:

kubectl --kubeconfig=/home/k8user/.kube/config-k8user --namespace=dmz get pods

You will see an output similar to this one:

NAME       READY   STATUS    RESTARTS   AGE
nginx-01   1/1     Running   0          14s

This time everything worked as expected: the user was authorized to use the allow-all policy, and the policy itself did not prevent the vanilla nginx image from running as root.

Wrapping Up

By using the PodSecurityPolicy admission controller, Kubernetes admins gain the ability to control the security parameters of pod specifications. This creates an additional security layer, since no pod can be created or updated without passing Pod Security Policy scrutiny.