Safe Practices for Running Containers in Kubernetes

Kubernetes has become the de facto standard for deploying containerized applications at scale in private, public, and hybrid cloud environments. However, this amazing technology is complicated, and it is getting more complicated each day. But in spite of its complexity, “the juice is worth the squeeze.”

Containers, Containers, Containers

One of the best ways to improve the performance, security, and reliability of Kubernetes clusters is to make your application images as lean as possible. Docker makes building images a breeze: put a standard Dockerfile in your project folder, run the `docker build` command, and shazam! Your container image is ready!

The downside of this simplicity is that it’s easy to build huge images full of things you don’t need, including potential security holes.

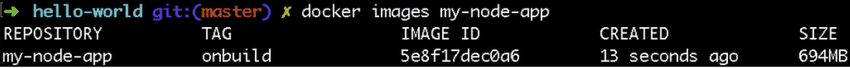

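```dockerfile
# Naive approach: the full Node.js image, whose ONBUILD triggers copy the
# whole build context and run npm install (the onbuild tags are deprecated)
FROM node:onbuild
EXPOSE 8080
```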

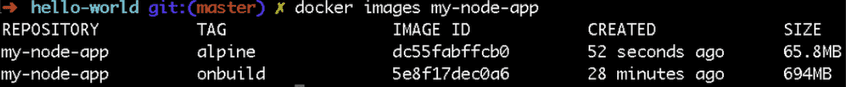

For apps written in interpreted languages, one way to reduce the size of your app image is to use a smaller base image such as Alpine.

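```dockerfile
# Smaller base image: Node.js on Alpine Linux
FROM node:alpine
WORKDIR /app

# Copy the manifest first so the dependency layer is cached between builds,
# then install production dependencies only
COPY package.json /app/package.json
RUN npm install --production

COPY server.js /app/server.js
EXPOSE 8080

# Exec form avoids wrapping the command in /bin/sh -c
CMD ["npm", "start"]
```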

Alpine Linux is a small, lightweight Linux distribution that is very popular with Docker users because it’s compatible with many apps while still keeping container images small.

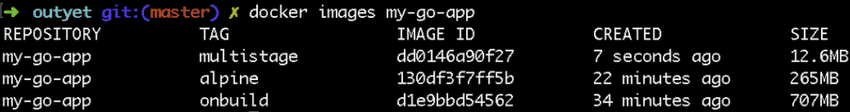

Optimizing builds for compiled languages like Go

With Docker’s support for multi-stage builds, you can easily ship just the binary with minimal scaffolding.

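```dockerfile
# Build stage: the full Go toolchain (assumes go.mod and the main package
# sit at the root of the build context)
FROM golang:alpine AS build-env
WORKDIR /app
COPY . .
# CGO_ENABLED=0 produces a statically linked binary
RUN CGO_ENABLED=0 go build -o goapp

# Runtime stage: only the binary plus CA certificates for outbound TLS
FROM alpine
RUN apk add --no-cache ca-certificates
WORKDIR /app
COPY --from=build-env /app/goapp /app/goapp
EXPOSE 8080
# Exec form: no shell is needed to start the binary
ENTRYPOINT ["./goapp"]
```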

Would you look at that! This image is only 12MB in size!

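NOTE: You can also use the empty `scratch` base image to shrink the app image even further. Because `scratch` contains no shell, libraries, or certificates, the binary must be statically linked (for example, built with `CGO_ENABLED=0`), and you have to copy in anything else it needs at runtime, such as CA certificates.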

Privileged vs. Non-Privileged Containers

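Here, a privileged container is any container whose uid 0 (root) is mapped to uid 0 on the host. Note that this is not the same as Kubernetes’ `securityContext.privileged: true` setting, which goes further and gives the container access to the host’s devices.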

Unless the image or the Pod spec says otherwise, processes in a container run as the root user (uid 0). To reduce the risk of a compromised container taking over its host, specify a non-root, least-privileged user ID when building the container image, and make sure that all application containers are non-privileged containers.

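```dockerfile
# Create an unprivileged user with a fixed UID (Debian/Ubuntu adduser syntax;
# Alpine's BusyBox adduser uses short flags such as -D and -u instead)
ENV USER=appuser
ENV UID=10001
RUN adduser \
    --disabled-password \
    --gecos "" \
    --home "/nonexistent" \
    --shell "/sbin/nologin" \
    --no-create-home \
    --uid "${UID}" \
    "${USER}"

# Switch to that user; a numeric UID lets Kubernetes verify runAsNonRoot
USER ${UID}
```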

(See https://stackoverflow.com/a/55757473/12429735)

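NOTE: Your containers should do one thing well. See Google’s distroless images, which contain only your application and its runtime dependencies, with no package manager or shell.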
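The `USER` instruction is what actually drops root, but an application can also refuse to start as root as a second line of defense. Below is a minimal Go sketch of such a start-up guard. The names `requireNonRoot` and `checkCurrentProcess` are hypothetical, and the sketch relies only on the standard library’s `os.Geteuid`.

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// errRunningAsRoot is returned when the effective user ID is 0 (root).
var errRunningAsRoot = errors.New("refusing to run as root (uid 0); set a non-root USER in the image")

// requireNonRoot is a hypothetical start-up guard: it rejects uid 0 and
// accepts any other effective user ID.
func requireNonRoot(euid int) error {
	if euid == 0 {
		return errRunningAsRoot
	}
	return nil
}

// checkCurrentProcess applies the guard to this process. A real service
// would call it first thing in main and exit on error. os.Geteuid returns
// -1 on Windows, which the guard treats as non-root.
func checkCurrentProcess() error {
	return requireNonRoot(os.Geteuid())
}

func main() {
	// Demonstrate the guard on root and on the UID 10001 created above.
	for _, uid := range []int{0, 10001} {
		if err := requireNonRoot(uid); err != nil {
			fmt.Printf("uid %d: %v\n", uid, err)
		} else {
			fmt.Printf("uid %d: ok\n", uid)
		}
	}
	if err := checkCurrentProcess(); err != nil {
		fmt.Println("this process:", err)
	}
}
```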

Namespaces, Namespaces, Namespaces

Use Namespaces, always. Namespaces are to Kubernetes projects what containers are to processes. Apart from the security boundary that Namespaces provide (together with RBAC and network policies), they’re an excellent way to partition your work and an excellent way to reset or delete it: deleting a Namespace deletes every object inside it.

Creating a Namespace can be done with a single command. If you wanted to create a Namespace called ‘test’ you would run:

```shell
kubectl create namespace test
```

You can also specify a Namespace in the YAML declaration:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: awesome-project
```
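Whichever way you create it, a Namespace name must be a valid RFC 1123 DNS label: at most 63 characters, only lowercase letters, digits, and `-`, and starting and ending with a letter or digit. Here is a small Go sketch of that check, which can be handy in CI before `kubectl` ever sees a manifest. `validNamespaceName` is a hypothetical helper, and the regular expression mirrors the documented rule rather than calling Kubernetes’ own validation code.

```go
package main

import (
	"fmt"
	"regexp"
)

// dns1123Label matches lowercase alphanumerics and '-', starting and ending
// with an alphanumeric character (the RFC 1123 label format).
var dns1123Label = regexp.MustCompile(`^[a-z0-9]([-a-z0-9]*[a-z0-9])?$`)

// validNamespaceName reports whether name satisfies the RFC 1123 label rules
// that Kubernetes applies to Namespace names.
func validNamespaceName(name string) bool {
	return len(name) <= 63 && dns1123Label.MatchString(name)
}

func main() {
	for _, n := range []string{"test", "awesome-project", "Awesome_Project", "-test"} {
		fmt.Printf("%-16s valid=%v\n", n, validNamespaceName(n))
	}
}
```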

kubectl apply vs. kubectl create

`kubectl apply` creates and updates resources in a cluster from resource configuration files. It also manages complexity, such as the ordering of operations and merging user-defined state with cluster-defined state.

```shell
kubectl apply -f awesome.yaml
```

It’s tempting to get a quick hit with `kubectl create namespace awesome-project`, but after several such commands you might end up in a rabbit hole, wondering how you reached the current state and, more importantly, how to recreate it. So I encourage you to use `kubectl apply` for the initial deployment and for subsequent updates, and `kubectl delete` for objects that need to be removed. As your setup grows, consider a package manager such as Helm.
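To see why the declarative approach keeps state reproducible, here is a deliberately simplified Go model of what an apply computes. It is a sketch under heavy assumptions, not kubectl’s implementation: real `kubectl apply` performs a three-way merge against the last-applied configuration, while the hypothetical `planApply` just compares whole specs as strings. It does capture one behavior described above: by default, objects missing from your files are left alone, so removal needs an explicit `kubectl delete`.

```go
package main

import (
	"fmt"
	"sort"
)

// Plan lists what a declarative apply would do for each named object.
type Plan struct {
	Create, Update, Unchanged []string
}

// planApply is a simplified model of declarative apply: objects in the
// desired config are created if missing and updated if their spec differs.
// Live objects absent from the desired config are not touched.
func planApply(desired, live map[string]string) Plan {
	var p Plan
	for name, spec := range desired {
		current, exists := live[name]
		switch {
		case !exists:
			p.Create = append(p.Create, name)
		case current != spec:
			p.Update = append(p.Update, name)
		default:
			p.Unchanged = append(p.Unchanged, name)
		}
	}
	// Sort for deterministic output, since Go map iteration order is random.
	sort.Strings(p.Create)
	sort.Strings(p.Update)
	sort.Strings(p.Unchanged)
	return p
}

func main() {
	desired := map[string]string{
		"namespace/awesome-project": "v1",
		"deployment/microservice-y": "replicas=100",
		"service/service-p":         "type=NodePort",
	}
	live := map[string]string{
		"namespace/awesome-project": "v1",
		"deployment/microservice-y": "replicas=3",
		"configmap/old-settings":    "stale",
	}
	fmt.Printf("%+v\n", planApply(desired, live))
}
```

Running it reports `service/service-p` to create and `deployment/microservice-y` to update, and it never mentions `configmap/old-settings`: that object stays in the cluster until someone deletes it.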

Get the most out of Labels

Labels are used to organize Kubernetes resources. They are key-value pairs that can be used to identify or group the resources in Kubernetes. You can label Kubernetes-native resources as well as Custom Resources.

Labels are very powerful because they attach open-ended, entirely user-defined metadata to Kubernetes resources. Kubernetes itself is built on this principle: Services and Deployments find their Pods through labels, so labeling is an intrinsic capability and not an afterthought bolted on. At a minimum, we recommend these labels on every Deployment:

- app (the application name)

- version

- release

- stage

```yaml
metadata:
  labels:
    app: my-app
    version: "v31"
    release: "r42"
    stage: production
```
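Because these labels are a team convention rather than something Kubernetes enforces, it can help to check for them in CI. Here is a minimal Go sketch, assuming the manifests have already been parsed into a label map. `missingLabels` is a hypothetical helper, and treating an empty value as missing is a policy choice of this sketch (Kubernetes itself accepts empty label values).

```go
package main

import "fmt"

// requiredLabels is the minimum label set recommended above for every Deployment.
var requiredLabels = []string{"app", "version", "release", "stage"}

// missingLabels returns the recommended keys that are absent or have an
// empty value, in the order listed in requiredLabels.
func missingLabels(labels map[string]string) []string {
	var missing []string
	for _, key := range requiredLabels {
		if labels[key] == "" {
			missing = append(missing, key)
		}
	}
	return missing
}

func main() {
	labels := map[string]string{"app": "my-app", "version": "v31", "stage": "production"}
	fmt.Println("missing:", missingLabels(labels)) // missing: [release]
}
```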

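Labels do not provide uniqueness: many objects can carry the same labels, which is exactly what makes them useful for grouping. Label selectors are the core grouping primitive in Kubernetes and are used to link objects together. For example, the Service below sends traffic to every Pod labeled `app: my-app`: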

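```yaml
apiVersion: v1
kind: Service
metadata:
  name: service-p
  namespace: awesome-project
  labels:
    approval: production
spec:
  selector:
    app: my-app
  type: NodePort
  ports:
    # Example values: Service port 80 forwards to container port 8080
    - port: 80
      targetPort: 8080
```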
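Equality-based selection, as used by the Service above, boils down to a subset check: an object matches when it carries every key/value pair in the selector, and any extra labels are ignored. Here is a small Go sketch of that rule. `matchesSelector` is a hypothetical name, and the sketch omits the set-based operators (such as `In`, `NotIn`, and `Exists`) that Kubernetes also supports through `matchExpressions`.

```go
package main

import "fmt"

// matchesSelector implements equality-based label selection: an object
// matches when every key/value pair in the selector appears in its labels.
// Extra labels on the object are ignored.
func matchesSelector(selector, labels map[string]string) bool {
	for key, want := range selector {
		if got, ok := labels[key]; !ok || got != want {
			return false
		}
	}
	return true
}

func main() {
	// The selector of service-p above.
	selector := map[string]string{"app": "my-app"}
	pods := []struct {
		name   string
		labels map[string]string
	}{
		{"web-1", map[string]string{"app": "my-app", "version": "v31", "stage": "production"}},
		{"web-2", map[string]string{"app": "my-app", "version": "v32", "stage": "staging"}},
		{"other-1", map[string]string{"app": "other-app"}},
	}
	for _, p := range pods {
		fmt.Printf("%-8s selected=%v\n", p.name, matchesSelector(selector, p.labels))
	}
}
```

Here `web-2` is selected too, because it carries `app: my-app` even though its `stage` is `staging`. If the Service should only reach production Pods, add `stage: production` to its selector. Keep in mind that a Service only selects Pods in its own Namespace.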

Use Deployments

Use Deployments all the time, even when you’re deploying your first single Nginx pod. Deployments are “First Class” travel for the price of Coach: you get rolling deployments for free, and if you make a mistake, you re-apply and Kubernetes takes care of killing the naughty pods and replacing them with well-behaved ones.

Deployments supersede ReplicationControllers. A Deployment manages ReplicaSets (themselves the successor to ReplicationControllers), updates them when you change the Pod template, and can roll back to a previous revision.

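```yaml
apiVersion: apps/v1  # apps/v1beta1 was removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: microservice-y
  namespace: awesome-project
spec:
  replicas: 100
  # apps/v1 requires a selector that matches the Pod template labels
  selector:
    matchLabels:
      app: publicname-a
  template:
    metadata:
      labels:
        app: publicname-a
        language: golang
    spec:
      containers:
        - name: grpc-proxy
          image: gcr.io/our-project/grpc-proxy-image:tag-m
```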
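Rolling updates are bounded by the Deployment’s `maxSurge` and `maxUnavailable` settings, which both default to 25%. When they are percentages, Kubernetes rounds the surge up and the unavailable count down. Here is a short Go sketch of that arithmetic for `microservice-y`. `rolloutBounds` is a hypothetical helper that only handles percentage values, not absolute numbers.

```go
package main

import "fmt"

// rolloutBounds converts percentage-based maxSurge and maxUnavailable into
// Pod counts (surge rounds up, unavailable rounds down) and returns the most
// Pods that may exist at once and the fewest that must stay available.
func rolloutBounds(replicas, surgePct, unavailablePct int) (maxPods, minAvailable int) {
	surge := (replicas*surgePct + 99) / 100        // ceiling division
	unavailable := replicas * unavailablePct / 100 // floor division
	return replicas + surge, replicas - unavailable
}

func main() {
	// 25% is the default for both settings; 100 replicas matches microservice-y.
	maxPods, minAvailable := rolloutBounds(100, 25, 25)
	fmt.Printf("at most %d Pods, at least %d available\n", maxPods, minAvailable)
}
```

For 100 replicas this prints `at most 125 Pods, at least 75 available`: during a rollout the Deployment may briefly run 25 extra Pods while never letting more than 25 be unavailable.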

Define Resource Quotas

Running containers without resource bounds puts your system at risk of denial-of-service (DoS) or “noisy neighbor” scenarios. To prevent and minimize those risks, you should define resource quotas. Unless a default is configured, containers are created with no CPU or memory requests or limits. A ResourceQuota is attached to a Kubernetes Namespace and caps the total CPU and memory that all pods in that Namespace can request and consume, as well as how many objects, such as pods, it can contain.

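The following example quota limits the namespace to 4 pods in total. Across all of those pods, the sum of CPU requests may not exceed 1 CPU and the sum of memory requests may not exceed 1Gi, while the sums of CPU and memory limits may not exceed 2 CPUs and 2Gi. Once a quota like this is active, every new pod in the namespace must declare CPU and memory requests and limits (or inherit defaults from a LimitRange), or it is rejected.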

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-resources
spec:
  hard:
    pods: "4"
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
```
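The quota’s arithmetic can be sketched in a few lines of Go. This is a simplified model of ResourceQuota admission, assuming CPU is counted in millicores (`1` = `1000m`) and memory in MiB (`1Gi` = `1024Mi`). `admit`, `Quota`, and `Compute` are hypothetical names, and the real quota system tracks many more resource types. Each Pod in the demo requests 250m CPU and 256Mi and is limited to 500m and 512Mi; these are example values.

```go
package main

import (
	"errors"
	"fmt"
)

// Compute holds one Pod's quota-tracked amounts in milli-CPU and MiB.
type Compute struct {
	RequestsMilliCPU, RequestsMiB, LimitsMilliCPU, LimitsMiB int64
}

// Quota holds either a namespace's hard limits or its current usage.
type Quota struct {
	Pods int64
	Compute
}

// admit is a simplified model of ResourceQuota admission: a Pod is accepted
// only if it declares every tracked request and limit and the namespace
// totals stay within the hard limits. It returns the new usage on success.
func admit(hard, used Quota, pod Compute) (Quota, error) {
	if pod.RequestsMilliCPU <= 0 || pod.RequestsMiB <= 0 || pod.LimitsMilliCPU <= 0 || pod.LimitsMiB <= 0 {
		return used, errors.New("rejected: pod must set CPU and memory requests and limits")
	}
	next := Quota{
		Pods: used.Pods + 1,
		Compute: Compute{
			RequestsMilliCPU: used.RequestsMilliCPU + pod.RequestsMilliCPU,
			RequestsMiB:      used.RequestsMiB + pod.RequestsMiB,
			LimitsMilliCPU:   used.LimitsMilliCPU + pod.LimitsMilliCPU,
			LimitsMiB:        used.LimitsMiB + pod.LimitsMiB,
		},
	}
	checks := []struct {
		name       string
		got, limit int64
	}{
		{"pods", next.Pods, hard.Pods},
		{"requests.cpu (m)", next.RequestsMilliCPU, hard.RequestsMilliCPU},
		{"requests.memory (Mi)", next.RequestsMiB, hard.RequestsMiB},
		{"limits.cpu (m)", next.LimitsMilliCPU, hard.LimitsMilliCPU},
		{"limits.memory (Mi)", next.LimitsMiB, hard.LimitsMiB},
	}
	for _, c := range checks {
		if c.got > c.limit {
			return used, fmt.Errorf("rejected: %s would be %d, quota is %d", c.name, c.got, c.limit)
		}
	}
	return next, nil
}

func main() {
	// The compute-resources quota above, converted to milli-CPU and MiB.
	hard := Quota{Pods: 4, Compute: Compute{RequestsMilliCPU: 1000, RequestsMiB: 1024, LimitsMilliCPU: 2000, LimitsMiB: 2048}}
	// Hypothetical Pod: requests 250m CPU / 256Mi, limits 500m CPU / 512Mi.
	pod := Compute{RequestsMilliCPU: 250, RequestsMiB: 256, LimitsMilliCPU: 500, LimitsMiB: 512}

	var used Quota
	for i := 1; i <= 5; i++ {
		next, err := admit(hard, used, pod)
		if err != nil {
			fmt.Printf("pod %d: %v\n", i, err)
			continue
		}
		used = next
		fmt.Printf("pod %d: admitted\n", i)
	}
}
```

With those example values, pods 1 through 4 are admitted (together they use exactly the 1 CPU and 1Gi of requests the quota allows), and pod 5 is rejected because the namespace already holds 4 pods.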

Log Everything

Application and system logs can help you understand what is happening inside your cluster, and they are particularly useful for debugging problems and monitoring cluster activity. Kubernetes does not provide a central log store on its own. Instead, the container runtime captures each container’s standard output and standard error on its node, and a logging agent running on every node, such as Fluentd, ships those streams to a central log hub. Depending on your setup, that hub might be your cloud provider’s service, such as Google Cloud Logging (formerly Stackdriver Logging), or Elasticsearch, with Kibana for viewing.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: counter
spec:
  containers:
    - name: count
      image: busybox
      args: [/bin/sh, -c,
             'i=0; while true; do echo "$i: $(date)"; i=$((i+1)); sleep 1; done']
```

The Pod above is a basic example of logging in Kubernetes: its container writes a line of text to the standard output stream once per second. You can read those lines with `kubectl logs counter`.
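Since the node agent ships whatever a container writes to standard output, emitting one JSON object per line makes those logs easier to parse and search in a backend such as Elasticsearch. Here is a minimal Go sketch of the counter with structured output. `logEntry` and `formatEntry` are hypothetical, and the field names are illustrative rather than a schema that Fluentd or Elasticsearch requires.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
	"time"
)

// logEntry is a hypothetical structured log record; the field names are
// illustrative, not a required schema.
type logEntry struct {
	Time    string `json:"time"`
	Level   string `json:"level"`
	Message string `json:"msg"`
	Count   int    `json:"count"`
}

// formatEntry renders one record as a single-line JSON object.
func formatEntry(count int, timestamp string) (string, error) {
	b, err := json.Marshal(logEntry{Time: timestamp, Level: "info", Message: "tick", Count: count})
	if err != nil {
		return "", err
	}
	return string(b), nil
}

func main() {
	// Like the busybox counter, write one line per second to standard output,
	// where the container runtime captures it for the node-level agent.
	// This sketch stops after three lines instead of looping forever.
	for i := 0; i < 3; i++ {
		line, err := formatEntry(i, time.Now().UTC().Format(time.RFC3339))
		if err != nil {
			fmt.Fprintln(os.Stderr, err)
			os.Exit(1)
		}
		fmt.Println(line)
		time.Sleep(time.Second)
	}
}
```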